In January, the Boston Public Library announced the launch of our Anti-slavery manuscript transcription website. This site was developed by a team from Zooniverse led by Dr. Samantha Blickhan. The goal of this project is to engage and enable a community of motivated citizens to help convert handwritten manuscripts into machine-readable text. This transcribed text will allow our anti-slavery manuscript collection to be more accurately searched and more powerfully researched. Below is a post from Dr. Samantha Blickhan on the status of the project.

Hi all, Sam here to give you an update on Anti-Slavery Manuscripts and give you some more information on some of the research we (Zooniverse) have been doing with this project.

The Anti-Slavery Manuscripts project was built as part of a 3-year Institute of Museum and Library Services (IMLS)-funded research grant entitled “Transforming Libraries and Archives Through Crowdsourcing.” This project aims to explore best practices for crowdsourced transcription, including the development of new tools, transcription methods, and interface designs. You can read about this ongoing research project in detail here.

One of the research questions in the IMLS grant was the following: “Does the current Zooniverse methodology of multiple independent transcribers and aggregation render better results than allowing volunteers to see previous transcriptions by others, and aggregating these results? How does each methodology impact the quality and depth of analysis and participation?”

To answer this question, we designed an A/B experiment for the Anti-Slavery Manuscripts project. In the experiment, we built two separate transcription workflows:

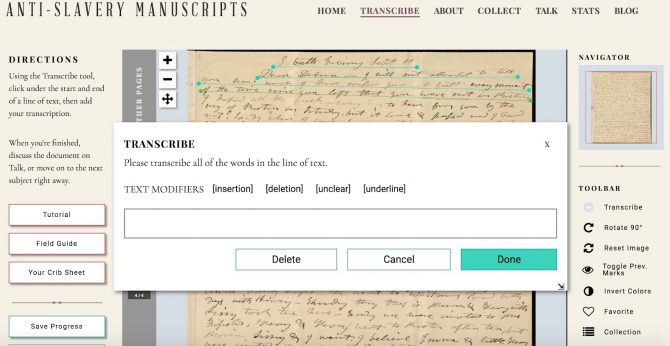

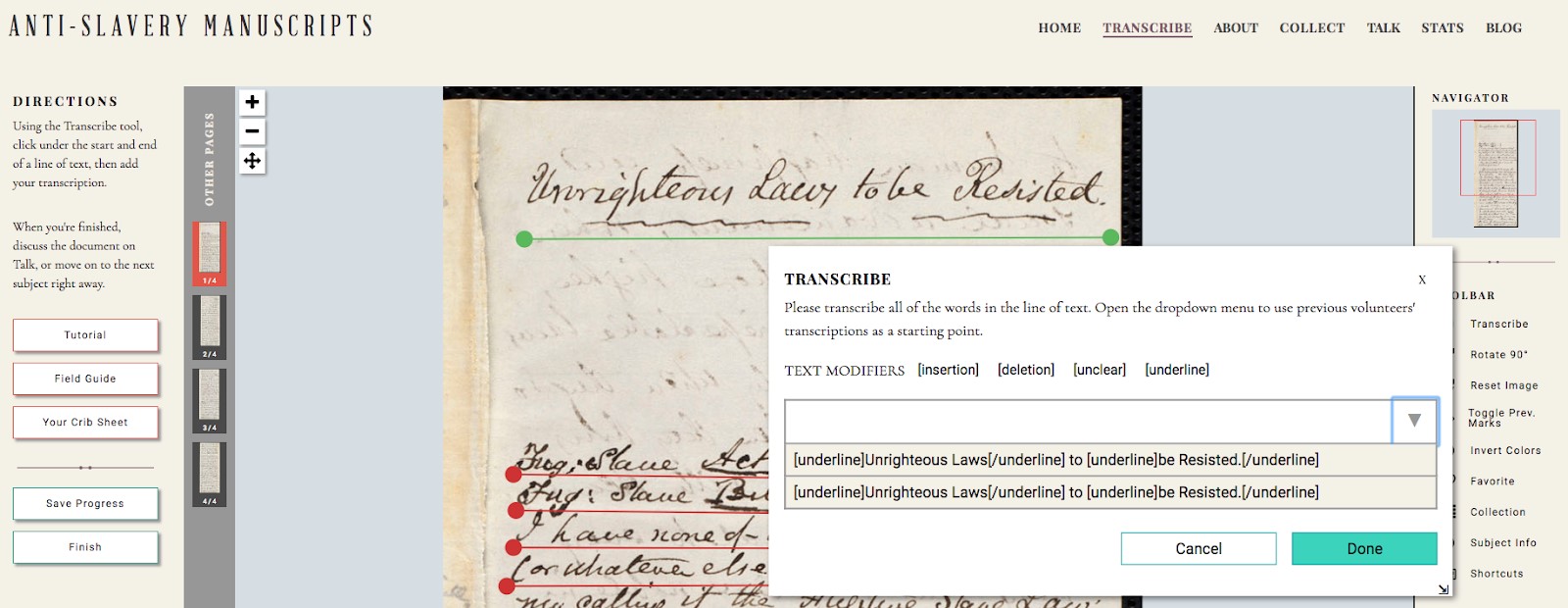

- An “independent” workflow, in which you, the volunteer community, transcribe letters independently of one another, and the results are aggregated together to make a single, “best” version. This is the same process used in previous Zooniverse transcription projects, like Shakespeare’s World (https://www.shakespearesworld.org).

- A “collaborative” workflow, in which you can see others’ transcriptions by clicking on a dropdown menu. You can select a previous transcription, which will pre-populate the text entry field, then edit it if necessary (or not at all!) before submitting. Each submission is treated as an individual transcription, even if it is based on another transcriber’s work.

You can see short videos of both workflows in action on this Talk thread.

When we launched the project in January, we uploaded an initial set of documents to both workflows so that we could compare the quality of transcription in each (you can read about how we divided the project data here). Gold standard (or “ground truth”) transcriptions were provided by Kathy Griffin from the Massachusetts Historical Society.

To evaluate data quality between the workflows, we compared the following measures:

- Confidence score for individual lines of text in each subject. We used aggregation algorithms to show how much (or how little) agreement existed in each line of each subject. We then compared the average rate of agreement between transcriptions in the independent workflow with that in the collaborative workflow.

- Amount of error between each workflow and the ground truth. We measured the Levenshtein distance between subjects from the independent workflow and the ground truth, and between the same subjects from the collaborative workflow and the ground truth, then compared the resulting Character Error Rate (CER), which is the edit distance divided by the number of characters in the ground-truth text.
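For readers curious about what these two measures involve, here is a minimal Python sketch. It is not the Zooniverse aggregation code: `levenshtein` is the textbook dynamic-programming edit distance, `character_error_rate` normalizes that distance by the length of the ground-truth text, and `line_agreement` is a hypothetical, much simpler stand-in for a line-level confidence score (the share of volunteers whose transcription of a line exactly matches the most common version). The sample strings are made up for illustration.

```python
from collections import Counter


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and
    substitutions needed to turn string a into string b."""
    # Only the previous row of the dynamic-programming table is kept.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca from a
                curr[j - 1] + 1,     # insert cb into a
                prev[j - 1] + cost,  # substitute ca -> cb (free if equal)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(hypothesis: str, reference: str) -> float:
    """CER = edit distance / number of characters in the ground truth."""
    if not reference:
        raise ValueError("CER is undefined for an empty ground-truth text")
    return levenshtein(hypothesis, reference) / len(reference)


def line_agreement(transcriptions: list[str]) -> float:
    """Hypothetical confidence score: the fraction of transcriptions of one
    line that exactly match the most common transcription of that line."""
    if not transcriptions:
        return 0.0
    _, top_count = Counter(transcriptions).most_common(1)[0]
    return top_count / len(transcriptions)


if __name__ == "__main__":
    ground_truth = "Dear Friend Garrison"  # made-up example line
    volunteer = "Dear Friend Garrisen"     # one substituted character
    print(levenshtein(volunteer, ground_truth))           # 1
    print(character_error_rate(volunteer, ground_truth))  # 0.05
    print(line_agreement(["Dear Sir", "Dear Sir", "Dear Sr"]))
```

Because CER divides by the length of the reference, it lets transcriptions of long and short letters be compared on the same scale. Real transcription aggregation also has to align lines and words from many volunteers before scoring them, which this sketch skips.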

We also evaluated further metrics, such as rate of retirement (the amount of time it takes for letters to be completely transcribed), time spent classifying, volunteer retention, and depth of engagement (e.g. number of views of the About section, Talk boards, Field Guide, and Tutorial).

We’ll keep sharing updates as we progress through the data.

The intent behind this experiment was to better inform the way that we design and build future crowdsourcing projects on the Zooniverse platform. We kept personally identifiable information anonymous during analysis, and it will not be used in any way in the publication of the outcomes.

We chose not to announce the experiment at the project launch in order to avoid affecting the outcomes. However, when the question arose on the Talk boards (“No red dots?”), we were upfront about the existence of the A/B experiment. We also explained the process in detail on the boards, and in conversations with the project moderators, who provided lots of helpful critique and feedback! This could be confusing at times, but in the end, having a single Talk board for two slightly different workflows made for really interesting conversations. From a researcher’s perspective, it was really useful for me, and I’d love to hear more about your experience.

This brings me to the current stage of the project! The initial research question was focused on data quality and volunteer engagement. However, we realized during the first few months that this experiment gave us a great opportunity to ask you, our volunteer community, about your preferences for the transcription experience. Now that the experiment has ended, we have opened up the transcription interface to allow you to choose whether you prefer to transcribe on your own or collaboratively. Once you have tried both workflows, we welcome your feedback in the form of participation in a short survey. You are also invited to participate in a public discussion of the workflows on our Talk forum.

Thank you all so much for your participation in the project, and for your patience and willingness to engage in interesting discussions. We have a lot more data waiting in the wings, which we’ll upload as soon as this “Choose Your Own Adventure Workflow” stage is finished in about a month or so. I look forward to reading your feedback and continuing to chat with you all on Talk!

Dr. Samantha Blickhan is the IMLS Postdoctoral Fellow at the Adler Planetarium in Chicago, and the Humanities Lead for Zooniverse. Her current research focuses on crowdsourcing and text, including an examination of individual vs. collaborative transcription methods on the Zooniverse platform.