As you may know, we’ve been hard at work looking over the data from the first round of transcriptions. While there are specific results we’re looking at within this examination (such as the comparison of independent vs. collaborative transcription methods), one of the most interesting parts of going through the data has been encountering unexpected results. In this blog post, I’ll discuss one unexpected outcome we encountered, and how we plan to deal with it as the Anti-Slavery Manuscripts (ASM) project continues.

ASM annotations: a quick overview

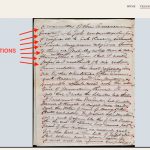

While the ultimate goal of ASM is to transcribe text, the act of transcribing relies on another task: annotation. In this project, the annotations are the dots and connecting lines that volunteers use to underline a piece of text on a digital image of a letter, as seen in the accompanying screenshot.

When we wrote the project tutorial, we instructed volunteers to “start at the beginning of a line of text.” This instruction did not include the explicit requirement of starting on a particular physical side of the page (i.e. “left” or “right”). This is because we knew that the dataset contained multidirectional text, including perpendicular text written in the margins, as well as cross-writing. However, because we did not explicitly ask our volunteers to place annotations at the start of the line in the direction of writing, many volunteers placed their annotations from right to left, as we noticed in the resulting data.

Why does annotation direction matter?

At this point, you may be asking yourself: who cares? Why does annotation direction even matter in a transcription project?

This is a very good question. Annotation direction matters because it affects the way that lines are aggregated on the image. In a previous blog post, Zooniverse data scientist Coleman Krawczyk explained how the process of aggregating annotations works for ASM. As Coleman noted in that post, one of the first steps in the process of ‘clustering’ (grouping together all the annotations for the same line of text) is to identify the slope of the lines. Slope is determined by comparing the coordinates of two points on a line: (x1, y1) and (x2, y2). In the case of ASM annotations, (x1, y1) refers to the first dot placed on the image, and (x2, y2) is the second. Lines that are drawn from left to right will therefore have a different slope than those drawn from right to left, even if they underline the same line of text. The lines with the same slope are clustered together and assumed to be annotations for the same written line of text.
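To make the direction dependence concrete, here is a minimal Python sketch. It assumes the orientation of an annotation is measured as a direction angle from the first dot to the second, using `math.atan2`; the post only says "slope", so that choice, the function name `direction_angle`, and the sample pixel coordinates are all illustrative assumptions rather than the actual ASM aggregation code. (A plain rise-over-run ratio would come out the same in both directions, which is why the sketch uses an angle.)

```python
import math


def direction_angle(x1, y1, x2, y2):
    """Angle in degrees of the vector from the first dot to the second dot."""
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


# The same underline, drawn in opposite directions (hypothetical coordinates).
left_to_right = direction_angle(100, 200, 500, 200)
right_to_left = direction_angle(500, 200, 100, 200)

print(left_to_right)  # 0.0
print(right_to_left)  # 180.0
```

The two annotations cover exactly the same pixels, but their measured directions differ by 180 degrees, so a grouping step that compares directions would treat them as different lines.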

To illustrate why annotations that don’t follow the direction of the text create a problem for this project, let’s look at some ASM data.

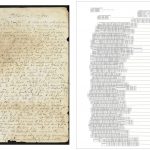

The image above shows the first page of a letter that was transcribed in the Collaborative workflow. The left side shows the digitized letter with annotations, and the right side shows the typed transcriptions, clustered by line and segmented by word (if you zoom in, you’ll see that the transcriptions are aligned vertically, with individual boxes drawn around each word in the text for easy comparison). On the annotations, the filled-in blue dots indicate the starting point (i.e. the first dot that was placed), and the empty dots indicate the second point. Notice how, because the annotations for this letter all began on the left-hand side of the page, the transcriptions are all aligned to the left.

This example is also from the Collaborative workflow, but the volunteer who annotated the document frequently began their annotations on the right, rather than on the left. Notice the presence of filled-in blue dots on the right side of the page. This choice may have been due to the presence of marginal text on the left-hand side of the page. However, it may have also been personal preference, as the same behavior appears in the annotations of the perpendicular text in the margins, as well as the second page of the letter. Looking at the right side of the image above, you can see a visual representation of how the transcription data is rendered when associated with annotations that start on the opposite side of the page: they are right-aligned, but also upside-down. Even though the annotations started on the right side of the page, the system still interprets them as having been written left to right. This means the text would be flipped 180 degrees, appearing upside-down.

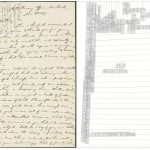

The final example is from the Independent workflow. The difference between this example and the examples from the Collaborative workflow is that, if you look at the annotations on the original image, you’ll notice that many of them feature two sets of dots which seem to be on the same line. In fact, these are annotations which, though very closely matching in terms of line placement, will not aggregate together because their slope is calculated differently due to the direction in which they were drawn. Because they won’t aggregate together, these transcriptions won’t count toward the aggregation for the individual line. This can result in over-classification of a document, in which more people transcribe a single line of text than is necessary to achieve consensus. Looking at the example above, you can see that some lines feature as many as 6 transcriptions.
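The splitting effect described above can be sketched in a few lines of Python. This is a toy stand-in for the real clustering, not the ASM aggregation code: it assumes direction is measured as an angle with `math.atan2`, and the helper names (`direction_angle`, `group_by_direction`), the 10-degree bin width, and the coordinates are all made up for illustration.

```python
import math
from collections import defaultdict


def direction_angle(first, second):
    """Angle in degrees (0 to 360) of the vector from the first dot to the second."""
    dx = second[0] - first[0]
    dy = second[1] - first[1]
    return math.degrees(math.atan2(dy, dx)) % 360


def group_by_direction(annotations, bin_width=10):
    """Bucket annotations by direction angle, a crude stand-in for clustering."""
    groups = defaultdict(list)
    for first, second in annotations:
        angle = direction_angle(first, second)
        # Round to the nearest bin and wrap 360 back around to 0.
        bucket = round(angle / bin_width) * bin_width % 360
        groups[bucket].append((first, second))
    return dict(groups)


# Six hypothetical annotations of one written line: three drawn left to
# right and three drawn right to left.
annotations = [
    ((100, 200), (500, 202)),
    ((102, 201), (498, 200)),
    ((101, 199), (499, 201)),
    ((500, 201), (100, 200)),
    ((497, 200), (103, 201)),
    ((499, 202), (101, 200)),
]

groups = group_by_direction(annotations)
counts = {bucket: len(members) for bucket, members in groups.items()}
print(counts)  # {0: 3, 180: 3}
```

All six annotations underline the same text, yet they land in two groups of three. If consensus for a line needed more than three matching transcriptions, neither group would reach it on its own, and the line would keep being shown to more volunteers.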

Outcomes & next steps for the project

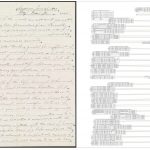

We’ve taken a few steps to reduce this problem as we move into the transcription of the next dataset. The first is through communication of best practices (one of which is this blog post!): we’ve updated the tutorial to mention the importance of annotating in the direction of the text. We didn’t want to put any restrictions on the annotation tool, since there are cases in which people will need to annotate in other directions. The example below shows a page of a letter which would require volunteers to annotate in multiple directions:

In conversations with our web development team, we wondered whether we could restrict the way that the annotation tools are used in the project. However, because letters with multidirectional text are so common in this project, we concluded that it would be unwise to place restrictions on the way that the tool can be used. Therefore, communication is one of the only tools we have to avoid seeing similar results in future datasets, both for Anti-Slavery Manuscripts and for other projects that make use of the same kind of annotation tools.

While this adds a step to our data cleaning process, it’s a great addition to our collective knowledge about how volunteers engage with the annotation/transcription interface. We’ve been thinking over long-term solutions for this problem as well, including applying the filled-in/not-filled-in dots design to the transcription interface so that volunteers can choose to re-draw lines that are incorrectly annotated. However, we don’t have any current plans to build that functionality into ASM. Most importantly, this is a reminder that we need to design projects that can work with a variety of volunteer behaviors, and shouldn’t presume that our entire community will approach a task in the same manner.

Dr. Samantha Blickhan is the IMLS Postdoctoral Fellow at the Adler Planetarium in Chicago, and the Humanities Lead for Zooniverse. Her current research focuses on crowdsourcing and text, including an examination of individual vs. collaborative transcription methods on the Zooniverse platform.
